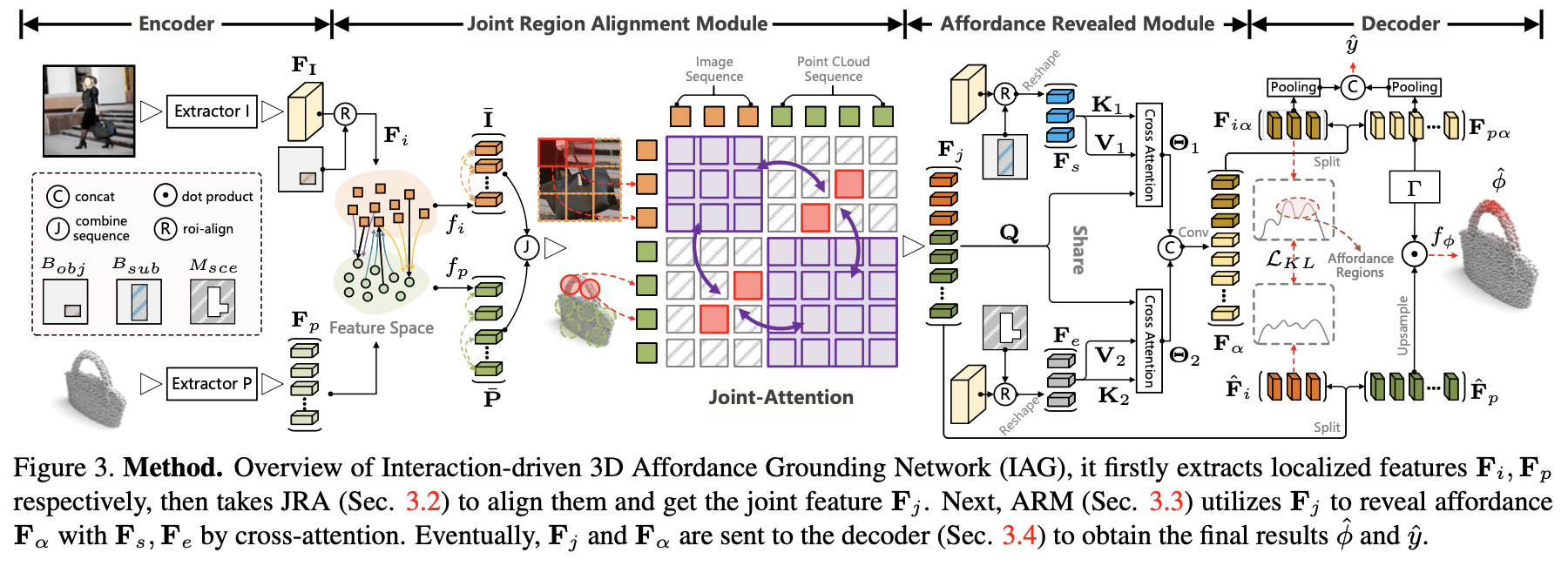

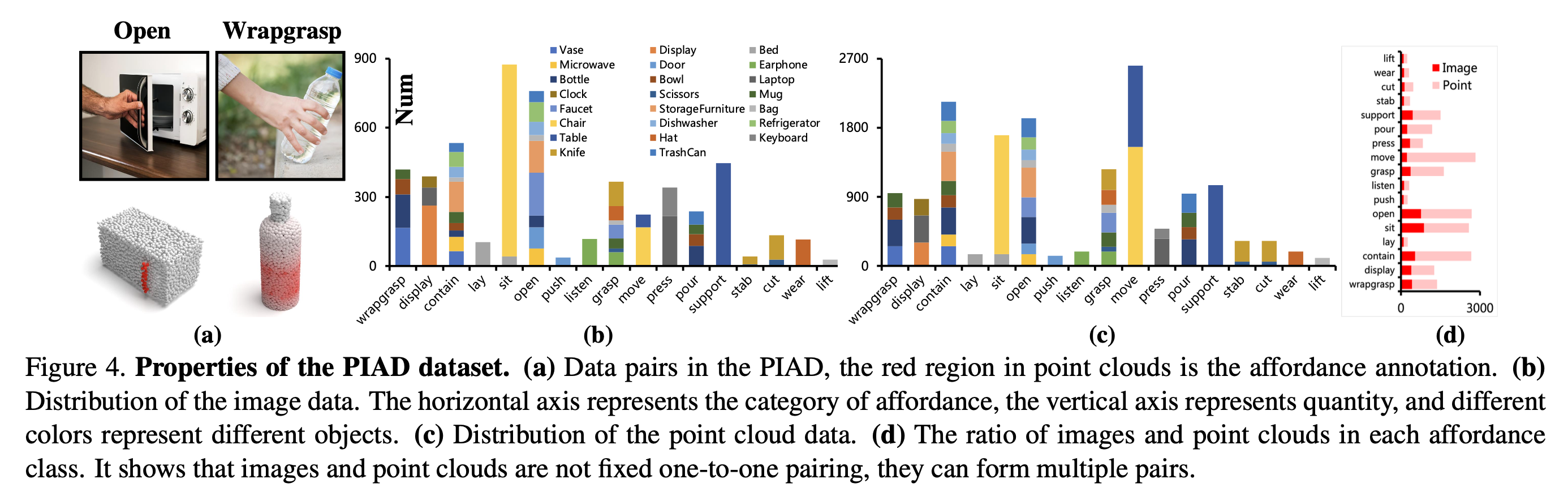

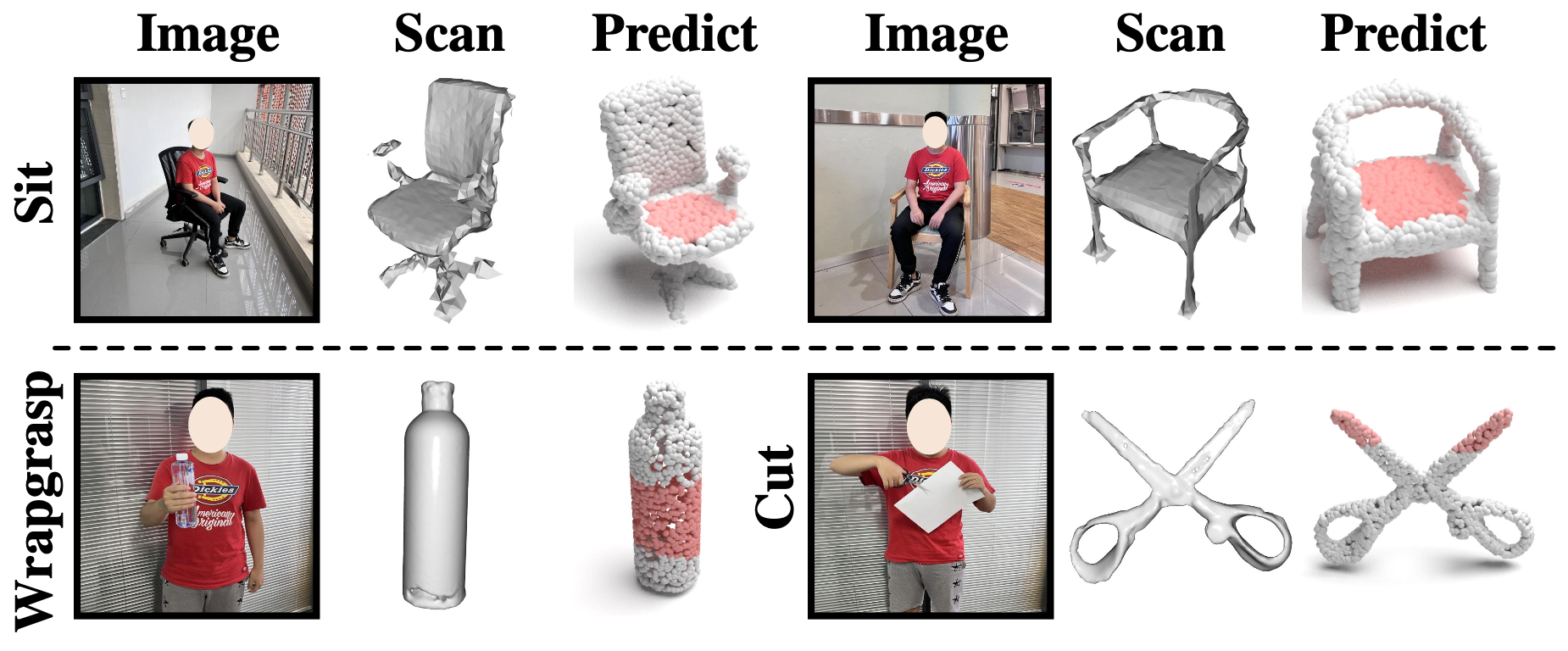

Grounding 3D object affordance seeks to locate the "action possibility" regions of objects in 3D space, which serves as a link between perception and operation for embodied agents. Existing studies primarily focus on connecting visual affordances with geometric structures, e.g., relying on annotations to declare interactive regions of interest on the object and establishing a mapping between those regions and affordances. However, the essence of learning an object's affordance is understanding how to use the object, and approaches that detach affordance from interaction generalize poorly. Humans, by contrast, can perceive object affordances in the physical world from demonstration images or videos. Motivated by this, we introduce a novel task setting: grounding 3D object affordance from 2D interactions in images, which faces the challenge of anticipating affordance through interactions from different sources. To address this problem, we devise a novel Interaction-driven 3D Affordance Grounding Network (IAG), which aligns the region features of objects from different sources and models the interactive contexts for 3D object affordance grounding. In addition, we collect a Point-Image Affordance Dataset (PIAD) to support the proposed task. Comprehensive experiments on PIAD demonstrate the reliability of the proposed task and the superiority of our method.

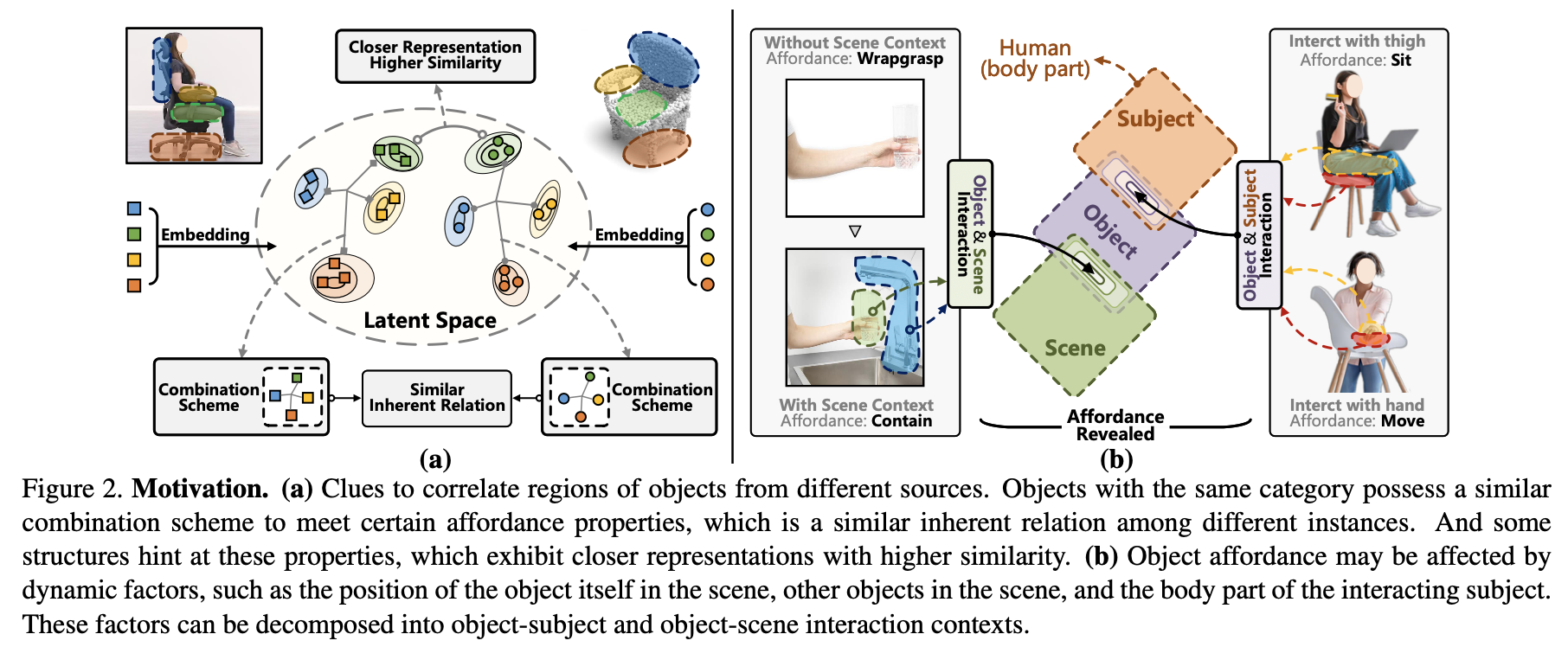

This challenging task raises several essential issues that must be properly addressed. 1) Alignment ambiguity. To ground 3D object affordance from 2D source interactions, the prerequisite is to establish correspondence between the regions of the object in the different sources. The object in the 2D demonstrations and the 3D object at hand are usually different physical instances, with different locations and scales. This discrepancy may cause confusion about which affordance regions correspond, leading to alignment ambiguity. However, objects are commonly designed to satisfy particular human needs, so objects of the same category generally follow a similar combination scheme of components to meet certain affordances, and these affordances are hinted at by certain structures (Fig. 2 (a)). These properties are invariant across instances and can be used to correlate object regions from different sources. 2) Affordance ambiguity. Affordance is dynamic and multiple: an object's affordance may change with the situation, and the same part of an object can afford multiple interactions. As shown in Fig. 2 (b), "Chair" affords "Sit" or "Move" depending on the human action, and "Mug" affords "Wrapgrasp" or "Contain" depending on the scene context. These properties can introduce ambiguity when extracting affordance. However, these dynamic factors can be decomposed into subject-object and object-scene interactions, and modeling these interactions makes it possible to extract explicit affordance.
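To make the idea of correlating object regions across sources concrete, the following is a minimal sketch, not the IAG architecture itself. It assumes (hypothetically) that each 3D point and each 2D image region has already been encoded as a feature vector of the same dimension, and it uses plain scaled dot-product attention as a stand-in for whatever alignment module is actually used. The function name `cross_attend` and the toy features are illustrative assumptions.

```python
import math

def softmax(row):
    """Numerically stable softmax over a list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attend(point_feats, image_feats):
    """For each 3D point feature, attend over 2D image-region features.

    Returns (weights, aligned), where weights[i][j] is how strongly point i
    attends to image region j, and aligned[i] is the attention-weighted mix
    of image-region features for point i. Hypothetical helper, for
    illustration only.
    """
    dim = len(point_feats[0])
    scale = 1.0 / math.sqrt(dim)
    weights, aligned = [], []
    for p in point_feats:
        # Scaled dot-product similarity between this point and every region.
        scores = [scale * sum(a * b for a, b in zip(p, r)) for r in image_feats]
        w = softmax(scores)
        weights.append(w)
        aligned.append(
            [sum(wj * r[k] for wj, r in zip(w, image_feats)) for k in range(dim)]
        )
    return weights, aligned

# Toy features (assumed): three 3D points and two 2D image regions.
point_feats = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1]]
image_feats = [[4.0, 0.0], [0.0, 4.0]]

weights, aligned = cross_attend(point_feats, image_feats)
# Index of the image region each point attends to most strongly.
best_region = [max(range(len(w)), key=w.__getitem__) for w in weights]
```

In this toy setting, points whose features resemble a given image region receive most of their attention weight from that region, which mirrors the intuition that structures shared across instances can serve as anchors for alignment even when the instances differ in location and scale.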